A Practical Ethical AI Strategy for Human-Centered Innovation

Why Ethical AI Matters in 2025 and Beyond

A comprehensive case study focusing on the ‘what’ and ‘how’ of this UX process

On the path to ethical AI: Deciphering the “AI ethics maturity model”

What Makes an AI Strategy Truly Ethical?

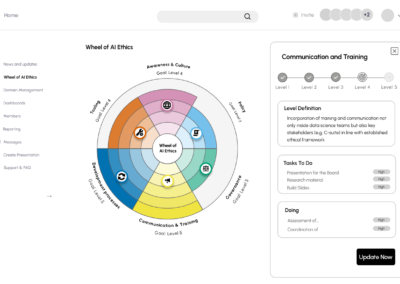

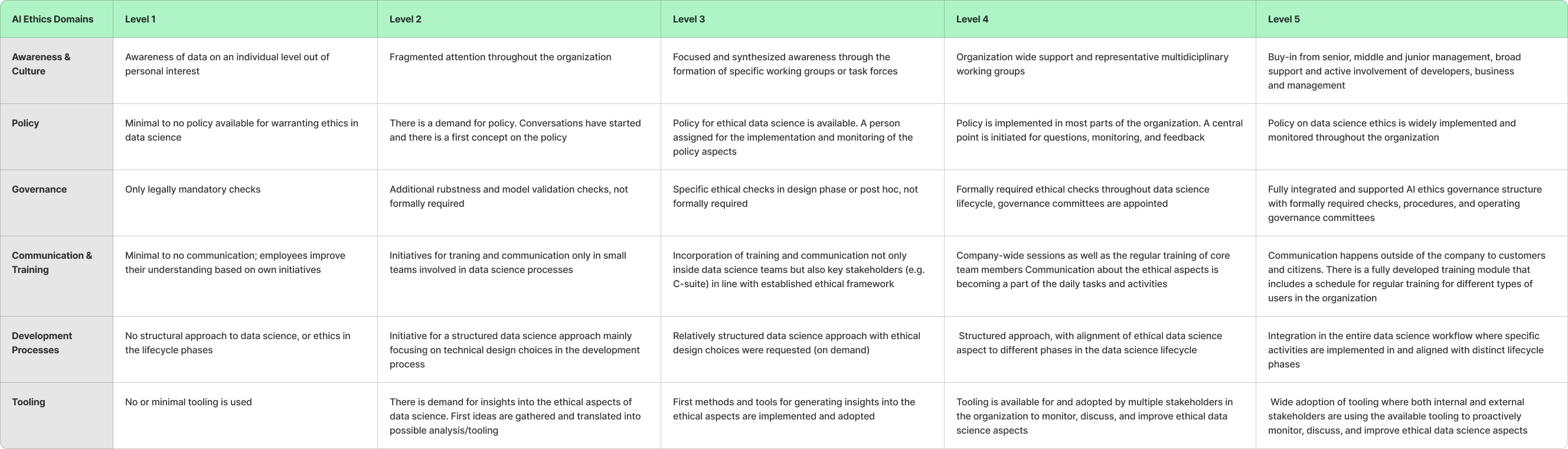

If you’re looking for an ethical AI strategy that balances innovation with responsibility, this guide gives you the tools to start now. In today’s rapidly evolving AI landscape, ethical considerations are paramount. We’ve developed a UX/UI prototype that lets users interact with the AI Ethics Maturity Model (developed by EDSA) to guide organisations towards responsible AI practices.

With the increasing influence of AI on various aspects of society, it is imperative for organisations to adopt a framework that facilitates ethical decision-making in AI development. The AI Ethics Maturity Model offers a structured approach to assess, refine, and strengthen AI-related processes, policies, and cultural norms.

The model places a strong emphasis on fostering awareness, ensuring accountability, and promoting transparency at every stage of the AI lifecycle. In doing so, it seeks to balance innovation with the protection of societal welfare.

Objective: Create a user-friendly demo of the AI Ethics Maturity Model for a seamless user experience. The aim is to actively engage users in exploring the model and receiving tailored guidance to enhance their understanding and implementation of ethical AI practices.

Challenges faced: Strategic planning and innovative solutions

Key challenges:

- Clarifying the scope and identifying relevant ethical frameworks.

- Developing a structured assessment process for AI-related procedures and policies.

- Designing an intuitive, user-friendly interface for the model.

- Engaging stakeholders actively and securing their buy-in across all levels of the organisation.

To overcome these hurdles, we employed a variety of strategic approaches.

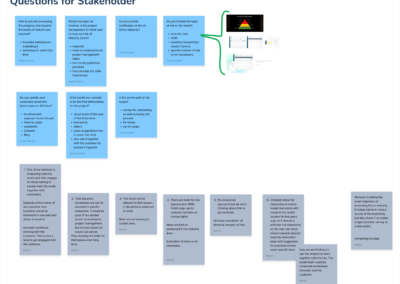

- We conducted comprehensive research and analysis to define the model’s scope and objectives. This involved extensive exploration of resources, including insights from InnoValor and stakeholder interviews. Additionally, we referred to the research report titled “The AI Ethics Maturity Model: A Holistic Approach to Advancing Ethical Data Science in Organizations” by J. Krijger and T. Thuis from Erasmus University, Rotterdam, Netherlands. The findings of this research were instrumental in shaping our understanding.

- We collaborated closely with ethical experts and stakeholders to identify pertinent frameworks and guidelines.

- We devised a structured assessment process that incorporated input from all stakeholders. This involved utilising a Stakeholder Interview template and maintaining ongoing communication and feedback loops with stakeholders.

- We implemented rigorous project management practices and fostered effective team coordination to facilitate seamless progress and communication throughout the project.

Through these efforts, we successfully navigated the challenges and moved the project forward. Our UX Design Team, consisting of Carlotta Katahara, Martina Zekovic, Anna Ptasińska, Michael R Misurell, and myself, Jeroen, pooled our passion and expertise to craft a user experience we’re eager to share. We extend our gratitude to our partner, InnoValor, whose contributions were instrumental in steering us towards our goal of ethical AI implementation. From digital identities to privacy and innovative business models, InnoValor leads the way in navigating the complexities of the digital landscape.

Source of the content table: the research report “The AI Ethics Maturity Model: A Holistic Approach to Advancing Ethical Data Science in Organizations” by J. Krijger and T. Thuis, Erasmus University, Rotterdam, Netherlands.

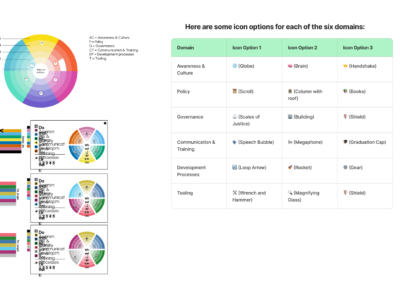

Understanding the 6 domains of AI ethics and the importance of ethical AI tools

Domains of AI Ethics: An overview

Understanding AI and its ethical imperative: people should know how AI can affect their lives and why it is important to use it wisely.

Establishing rules for AI usage: this includes laws and regulations that determine who is responsible if something goes wrong.

Governance: designated people are in charge of AI and make decisions about its use, which helps ensure that AI is used responsibly.

Ethical interaction with AI, a necessity: this includes training and communication about what is and isn’t acceptable when using AI.

Developing AI fairly: AI systems must be built fairly and reliably so that their results are not biased or discriminatory.

Tools: the programs and technologies used to create and use AI. These must be chosen carefully to ensure they are ethically sound and respect people’s privacy.
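Because the model organises maturity by domain, an assessment can be represented as answers grouped per domain. The following is a minimal Python sketch, not EDSA’s scoring method: the domain keys are hypothetical labels paraphrasing the six domains above, the answer scale of 1 to 5 is an assumption, and so is the weakest-link rule in which the organisation’s overall stage is its lowest domain level. The weakest domains are returned as focus areas, echoing the goal of showing users where they stand and what to improve.

```python
from statistics import mean

# Hypothetical keys paraphrasing the six domains described above.
DOMAINS = ("awareness", "policy", "governance",
           "communication_training", "development", "tools")
MIN_LEVEL, MAX_LEVEL = 1, 5  # assumed answer scale, not taken from the model


def domain_levels(answers: dict[str, list[int]]) -> dict[str, int]:
    """Average each domain's answers and round down to a whole maturity level."""
    levels = {}
    for domain in DOMAINS:
        scores = answers.get(domain)
        if not scores:
            raise ValueError(f"no answers for domain {domain!r}")
        if any(not MIN_LEVEL <= s <= MAX_LEVEL for s in scores):
            raise ValueError(f"answers for {domain!r} must lie in {MIN_LEVEL}..{MAX_LEVEL}")
        levels[domain] = int(mean(scores))  # scores are positive, so int() rounds down
    return levels


def assess(answers: dict[str, list[int]]) -> tuple[int, dict[str, int], list[str]]:
    """Return (overall stage, per-domain levels, domains to improve first)."""
    levels = domain_levels(answers)
    overall = min(levels.values())  # weakest-link rule (an assumption)
    focus = [d for d, level in levels.items() if level == overall]
    return overall, levels, focus


# Example answers from a fictional organisation.
answers = {
    "awareness": [3, 4, 3],
    "policy": [2, 2, 3],
    "governance": [3, 3],
    "communication_training": [4, 4],
    "development": [3, 2, 4],
    "tools": [2, 3],
}
overall, levels, focus = assess(answers)
print(overall, focus)  # 2 ['policy', 'tools']
```

Taking the minimum rather than the average keeps a strong score in one domain from hiding a weak one; an overall average would be an equally plausible, and equally hypothetical, alternative.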

Consequences of neglecting AI ethics

Awareness

1. Lack of understanding of ethical implications

People may not grasp how AI technology can misuse personal data. For instance, if individuals are unaware of how their data is collected and used by AI systems, they might unknowingly consent to intrusive data practices, leading to privacy breaches.

2. Ignorance or apathy towards ethical issues

Individuals might overlook the privacy risks associated with smart devices. For instance, if users disregard privacy policies or fail to update security settings on IoT devices, they could inadvertently expose sensitive personal information, making them vulnerable to cyber threats and data exploitation.

3. Insufficient awareness of AI’s societal impact

People may not fully comprehend how AI decisions can impact their lives. For example, if individuals are unaware of algorithmic bias in hiring processes, they might not recognize discriminatory practices that perpetuate inequality and hinder opportunities for underrepresented groups, exacerbating social disparities.

Policy

1. Absence of clear ethical guidelines or policies

Without clear rules on data usage, AI companies may exploit user data for profit-driven purposes. For instance, in the absence of regulations governing data sharing, companies might sell user data to third parties without user consent, compromising individual privacy and undermining trust in AI systems.

2. Lack of regulatory frameworks for AI ethics

In the absence of laws prohibiting discriminatory AI systems, companies may develop biased algorithms that perpetuate systemic inequalities. For instance, without regulations mandating fairness and transparency, AI systems in hiring processes may discriminate against certain demographic groups, leading to unfair employment practices and social marginalization.

3. Policy gaps allowing unethical AI practices

Policy loopholes may enable companies to deploy AI for manipulative purposes without facing consequences. For example, in the absence of regulations on targeted advertising, companies might use AI algorithms to manipulate consumer behavior and preferences, exploiting vulnerabilities and fostering addictive behaviours detrimental to individual well-being.

Governance

1. Inadequate oversight and governance

Inadequate oversight may allow companies to develop AI systems without accountability, risking misuse and abuse. For instance, without regulatory bodies monitoring AI development, companies might deploy biased algorithms in automated decision-making processes, leading to unjust outcomes and reinforcing systemic biases in society.

2. Insufficient accountability for AI decisions

Lack of accountability may result in companies evading responsibility for harmful AI practices. For example, if companies refuse to acknowledge their role in algorithmic discrimination, affected individuals may lack recourse for challenging biased decisions, perpetuating injustice and eroding public trust in AI technologies.

3. Lack of transparency in decision-making processes

Without transparency, companies can deploy AI algorithms without disclosing their inner workings, leading to opacity and mistrust. For instance, if companies use proprietary algorithms in credit scoring without disclosing the factors considered, individuals may be unfairly denied access to financial opportunities, exacerbating economic disparities and fostering resentment.

Communication and training

1. Lack of training in ethical AI practices

Developers may lack understanding of how to integrate ethical considerations into AI systems. For example, if developers are not trained on bias mitigation techniques, they may inadvertently embed discriminatory patterns in AI algorithms, perpetuating systemic biases and reinforcing social inequalities.

2. Poor communication among stakeholders

Lack of communication can result in misunderstandings about data usage and privacy concerns. For instance, if companies fail to communicate transparently about their data practices, users may distrust AI systems, leading to reduced adoption and hindering the potential benefits of AI-driven technologies in various domains.

3. Values and priorities mismatch

Misalignment between profit-driven objectives and ethical principles may lead to prioritization of financial gains over ethical considerations in AI development. For example, if companies prioritize maximising revenue over ensuring fairness and transparency in AI systems, they may overlook potential harms and neglect societal well-being, undermining the ethical integrity of AI technologies.

Development

1. Bias in AI algorithms

Biased algorithms may perpetuate discrimination and reinforce existing societal inequalities. For instance, if AI systems trained on biased datasets are deployed in recruitment processes, they may favour certain demographic groups while disadvantaging others, perpetuating systemic biases in employment opportunities and exacerbating social disparities.

2. Lack of diversity in development teams

Homogeneous development teams may overlook diverse perspectives, leading to biased design decisions in AI systems. For example, if AI development teams lack representation from minority groups, they may unintentionally overlook cultural nuances and inadvertently embed discriminatory biases in AI algorithms, perpetuating systemic inequalities.

3. Hasty or inadequate testing of AI systems

Insufficient testing may overlook flaws and vulnerabilities in AI systems, leading to unintended consequences. For instance, if AI systems are rushed to deployment without rigorous testing, they may exhibit unpredictable behaviour or fail to perform reliably, posing risks to user safety and undermining trust in AI technologies.

Tools

1. Ineffective AI monitoring tools

Inadequate monitoring tools may fail to detect misuse or errors in AI systems, compromising integrity and reliability. For example, if AI monitoring systems lack the capability to identify algorithmic biases or security vulnerabilities, companies may overlook systemic issues and fail to address potential risks, leading to adverse outcomes and reputational damage.

2. Vulnerabilities in AI systems

Vulnerable AI systems may be susceptible to exploitation by malicious actors, posing security threats and privacy risks. For example, if AI systems have inherent vulnerabilities or backdoors, hackers may exploit them to manipulate outcomes, steal sensitive data, or disrupt critical operations, causing significant harm to individuals and organisations.

3. Lack of user-friendly ethical AI tools

Complex interfaces may hinder user understanding and adoption of ethical AI tools, limiting their effectiveness. For example, if ethical AI tools have convoluted user interfaces or require specialised technical knowledge, users may struggle to navigate them effectively, impeding their ability to integrate ethical considerations into AI development processes.
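To make the point about monitoring tools concrete, here is a hedged Python sketch of one widely used bias check for hiring decisions, the “four-fifths rule” heuristic: each group’s selection rate is divided by the highest group’s rate, and ratios below 0.8 are flagged for review. This check is an illustration we chose, not a feature of the AI Ethics Maturity Model or of our prototype; the function names and sample data are hypothetical.

```python
from collections import Counter


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs -> selection rate per group."""
    totals, selected = Counter(), Counter()
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def four_fifths_flags(decisions, threshold=0.8):
    """Return impact ratios for groups whose rate is below threshold x the highest rate."""
    rates = selection_rates(decisions)
    if not rates:
        return {}  # no decisions to check
    highest = max(rates.values())
    if highest == 0:
        return {}  # nobody was selected, so the ratio is undefined
    return {group: round(rate / highest, 3)
            for group, rate in rates.items()
            if rate / highest < threshold}


# Fictional screening outcomes: group A 20 of 50 selected, group B 10 of 40.
decisions = ([("A", True)] * 20 + [("A", False)] * 30
             + [("B", True)] * 10 + [("B", False)] * 30)
print(selection_rates(decisions))    # {'A': 0.4, 'B': 0.25}
print(four_fifths_flags(decisions))  # {'B': 0.625}
```

A flag is a signal for human review rather than proof of discrimination; small groups in particular can produce unstable ratios.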

Charting the course of ethical AI: Insights unveiled

Our journey began in the fast-moving field of artificial intelligence, where innovation meets ethics. With the EU drafting new AI regulations, ethical AI practices became a pressing need. InnoValor, a pioneer in the field, wanted a tool to help organisations navigate this shifting landscape.

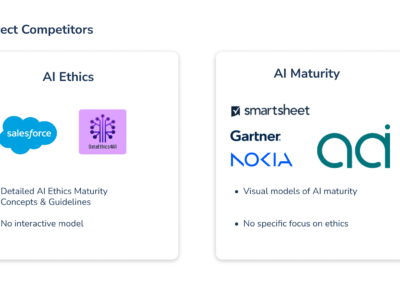

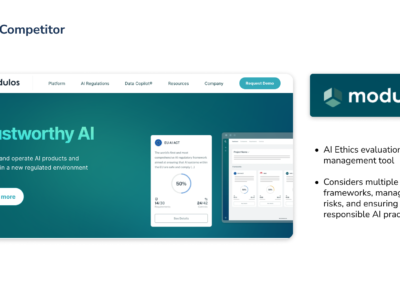

When we started our design sprint, the scope became clear: build an interactive version of the AI ethics maturity model. Our competitor analysis included companies such as Salesforce and Smartsheet, but our combination of ethics guidance and maturity assessment set our concept apart.

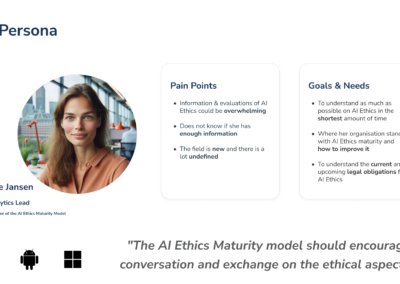

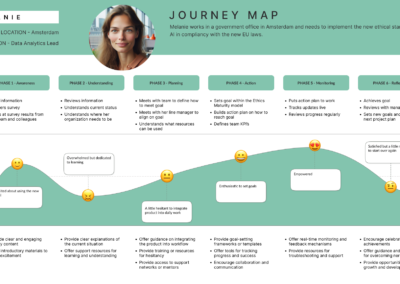

User interviews became our compass, revealing the intricate needs of individuals like Melanie Jansen, a Lead Data Analyst navigating the complexities of AI ethics. Her struggles with overwhelming information and the ambiguous nature of the field illuminated our path forward.

Our toolkit expanded to include stakeholder interviews and competitor analyses. Each component added depth to our understanding and propelled us closer to our goal.

Privacy notice: due to privacy and confidentiality, detailed UX research findings have been omitted.

Crafting a vision

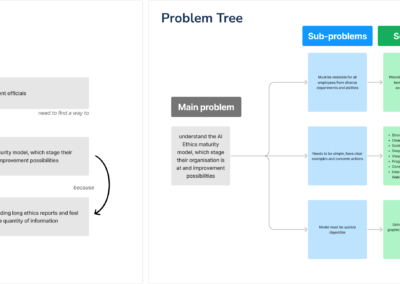

Problem statement: Government officials need a way to understand the AI Ethics Maturity Model, determine which stage their organisation is at, and identify possible improvements, because they don’t want to spend time reading long ethics reports and feel overwhelmed by the amount of information.

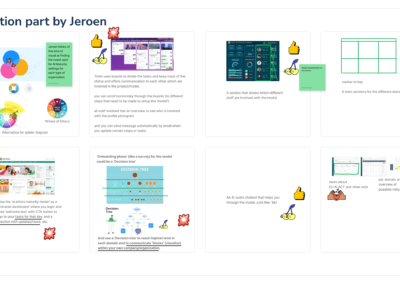

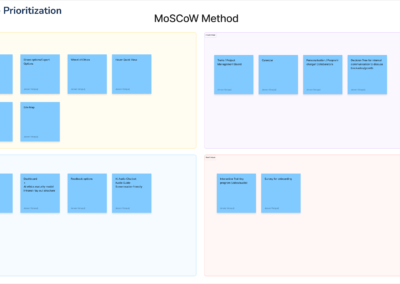

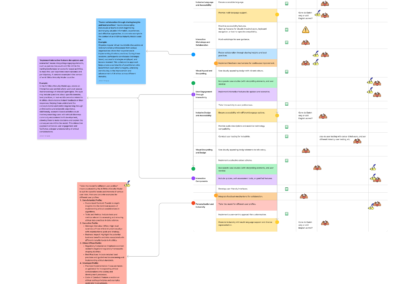

We approached the design with a strategic mindset, combining conceptual models (a Problem Tree) with iterative processes (Ideation). Our focus was not just on creating aesthetically pleasing visuals but on crafting a solution that addressed real-world challenges and commercial dynamics.

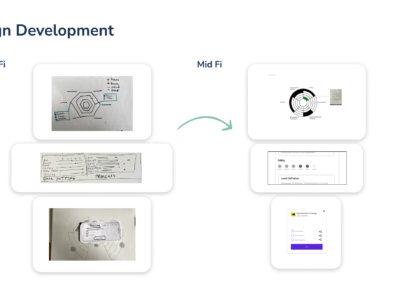

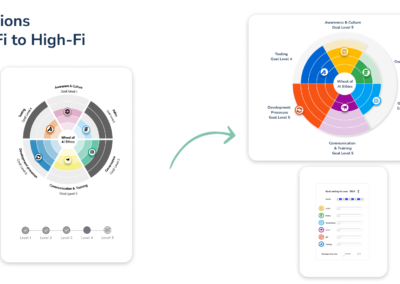

Feature Prioritization guided our decisions among the many design possibilities, ensuring that the most crucial elements took precedence. We progressed step by step from early low-fidelity sketches to refined high-fidelity prototypes.

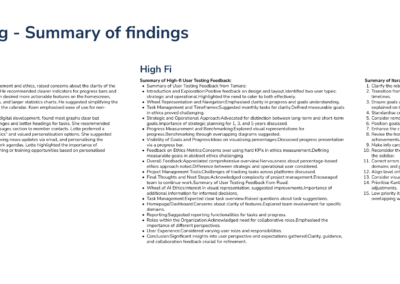

Because of time constraints, we skipped low-fi user testing and compensated with thorough mid-fi and hi-fi user testing. Each session provided valuable insights that fed into the next iteration and refined our solution. In addition, our ‘Research on appealing and engaging AI Ethics Maturity Model’ served as a touchstone for bringing user-centric elements into the design.

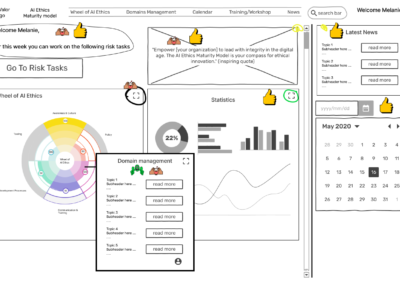

Our design includes a user flow for the “happy path”: the ideal route through the prototype, crafted to make user interaction seamless. This user-centric approach gives users more control and adds a human touch to our designs, reflecting our view that technology should enable human connection rather than stand in its way.

Throughout the project, we kept in mind the need for easy content creation: our solution should streamline the process rather than complicate it. We iterated continuously and applied the brand styling to achieve a visually compelling, cohesive design.

In essence, our design and development process blended artistry with pragmatism to create a solution that addresses today’s challenges and lays a foundation for future work.

Bridging design and development

As we shaped our vision for the UX/UI prototype of the AI Ethics Maturity Model, design and development went hand in hand. Through careful design and iteration, we built a framework intended to advance ethical innovation.

Key learnings and future collaboration

Reflecting on our journey, we gained valuable insights. It’s essential to align with stakeholders from the outset and to conduct thorough research in emerging fields like AI ethics. Our work was marked by continuous iteration based on user feedback, drawing on the collective strength of our team.

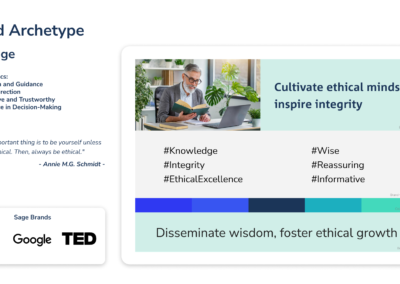

How did I take ownership to enhance our visual showcase?

After the design sprint ended, I independently expanded our presentation with additional slides that explain the AI ethics model in depth to potential clients. These slides give a comprehensive overview of collaborative strategies and team dynamics within our organisation.

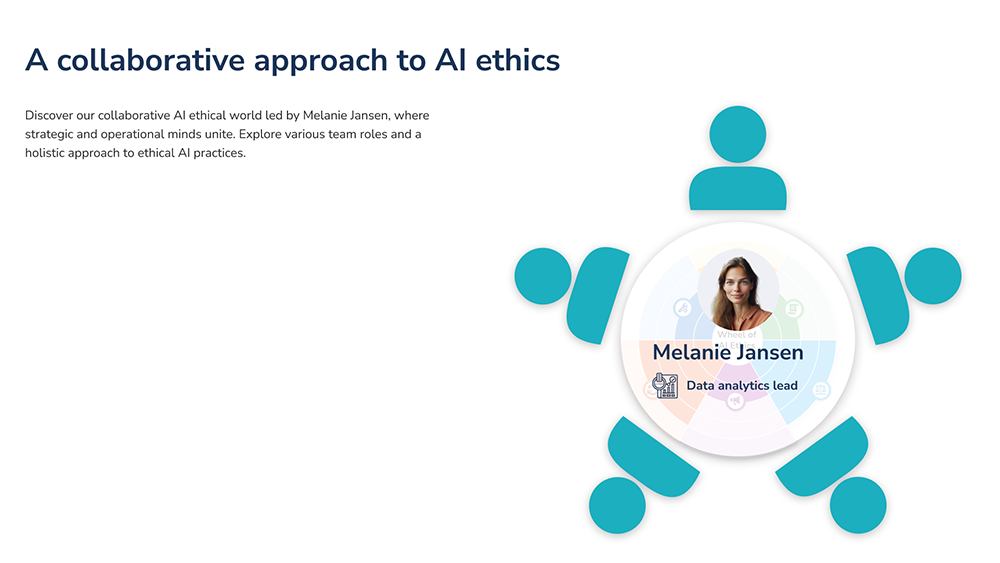

Navigating AI Ethics: collaborative strategies

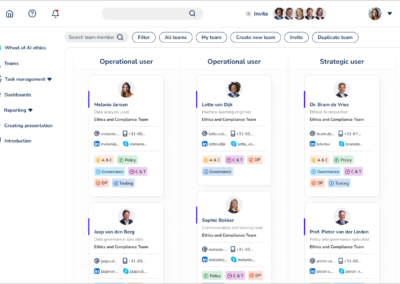

This visual shows the various user types, the strategic and operational approaches, and the collaborative roles within the organisation. It aims to give viewers a clear, engaging overview of how the model operates and how different teams collaborate to promote ethical decision-making. By illustrating strategic and operational objectives, showing each team’s role within the model, and emphasising the importance of diverse expertise, it communicates the complex dynamics of AI ethics in an accessible way and underscores the significance of collaboration within the organisation.

Collaboration in AI Ethics: A collective approach

This slide portrays the environment where strategic and operational users, under the leadership of Melanie Jansen, collaborate on AI ethics goals. It highlights diverse roles and expertise within the team for a holistic approach to ethical AI practices. Visual elements include a central user surrounded by team members, a round table symbolising the “Round Table” concept, and the integration of the “Wheel of AI Ethics” on the table. This illustration signifies collaboration and the importance of diverse perspectives.

I also translated the entire AI ethics model into Dutch to match the client’s language preference. Some components were not set to ‘auto-layout’, so a few needed manual adjustments after translation, but the model was carefully adapted to fit the Dutch context.

Site Map AI Ethics Maturity Model

In addition to the components presented above, I created a ‘Site Map AI Ethics Maturity Model’. It gives stakeholders a comprehensive visualisation and deeper insight into the model as a whole.

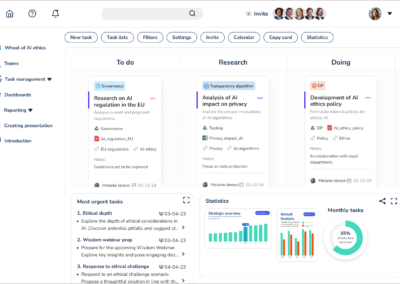

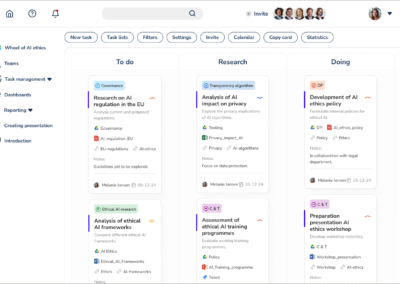

Task Management Screen

This screen shows how tasks related to AI ethics are managed within our model, from assigning tasks to tracking their progress. It is designed to support accountability and transparency in ethical AI practices.
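The task flow on this screen can be sketched as a small data model. This is a hypothetical Python illustration, not the prototype’s implementation (the prototype itself is a Figma design): the `Task` and `Status` names, the domain labels, and the rule that a task cannot start without an owner are all assumptions, chosen to show how assignment and progress tracking can support accountability.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    TODO = "to do"
    IN_PROGRESS = "in progress"
    DONE = "done"


@dataclass
class Task:
    title: str
    domain: str                  # hypothetical domain label, e.g. "policy"
    owner: Optional[str] = None  # person accountable for the task
    status: Status = Status.TODO

    def start(self) -> None:
        # Assumed accountability rule: work cannot start until someone owns it.
        if self.owner is None:
            raise ValueError(f"task {self.title!r} has no owner")
        self.status = Status.IN_PROGRESS

    def complete(self) -> None:
        if self.status is not Status.IN_PROGRESS:
            raise ValueError(f"task {self.title!r} has not been started")
        self.status = Status.DONE


def progress_by_domain(tasks: list[Task]) -> dict[str, float]:
    """Share of finished tasks per domain, as a progress overview might show."""
    total: dict[str, int] = defaultdict(int)
    done: dict[str, int] = defaultdict(int)
    for task in tasks:
        total[task.domain] += 1
        if task.status is Status.DONE:
            done[task.domain] += 1
    return {domain: done[domain] / total[domain] for domain in total}


tasks = [
    Task("Draft an AI use policy", "policy", owner="Data lead"),
    Task("Review training data sources", "development", owner="ML engineer"),
    Task("Schedule a bias audit", "development"),  # not yet assigned
]
tasks[0].start()
tasks[0].complete()
tasks[1].start()
print(progress_by_domain(tasks))  # {'policy': 1.0, 'development': 0.0}
```

Requiring an owner before a task can start is one simple way to make “who is responsible” explicit; a real tool might instead allow unowned work and highlight it on the overview.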

Stakeholder feedback and future plans

Stakeholders lauded the comprehensive approach and user-centric design showcased in our final presentation. They expressed enthusiasm for the Figma prototype demonstration, highlighting its potential to revolutionise AI ethics practices. Plans are underway to leverage the prototype for client demonstrations and further refinement.

Conclusion

In conclusion, our project combined innovation and empathy, resulting in a design that stakeholders received with enthusiasm. We hope it contributes to a lasting shift towards ethical innovation.

The final presentation, iteration, and user testing were pivotal to our success and gave us a roadmap for further development. Reflecting on our journey, we’ve learned valuable lessons in stakeholder alignment, ongoing research, user-centric iteration, harnessing team diversity, and effective time management.

As a gesture of gratitude, I provided InnoValor with an extended Dutch version of the AI Ethics Maturity Model prototype in Figma and shared a recording of our presentation. We eagerly await InnoValor’s future plans.

Seeking your perspective

Do you agree with the highlighted lessons learned during our project, or do you think there are other important aspects to consider?

How do you see the future of ethical innovation evolving within UX design, and what role do you think such models will play in it?